Our Insights

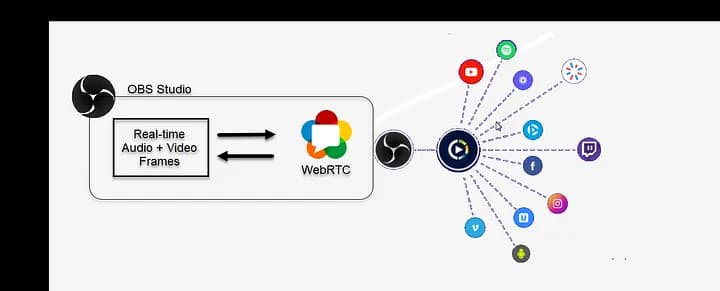

Real-Time Streaming Best Practices in Production

Feb 24, 2026•4 min read

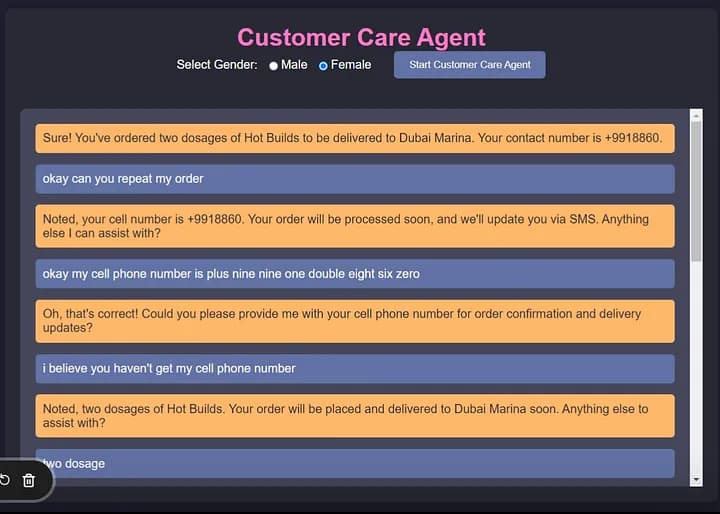

How AI is Turning Sales Calls into Strategic Assets

Feb 9, 2026•4 min read

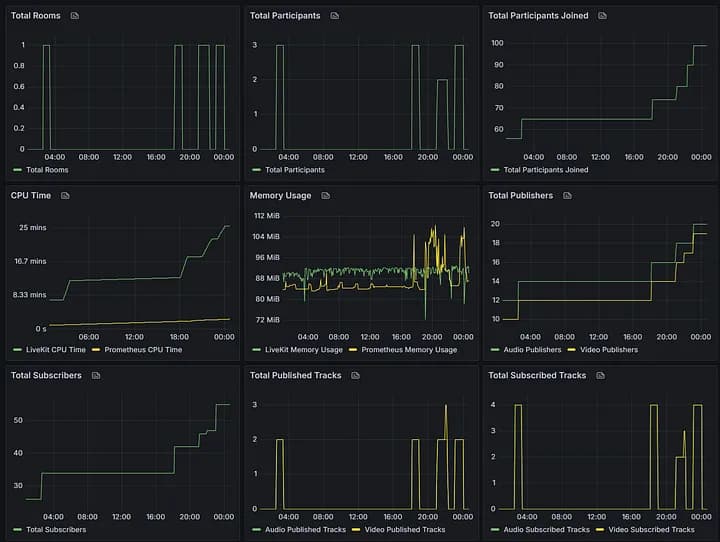

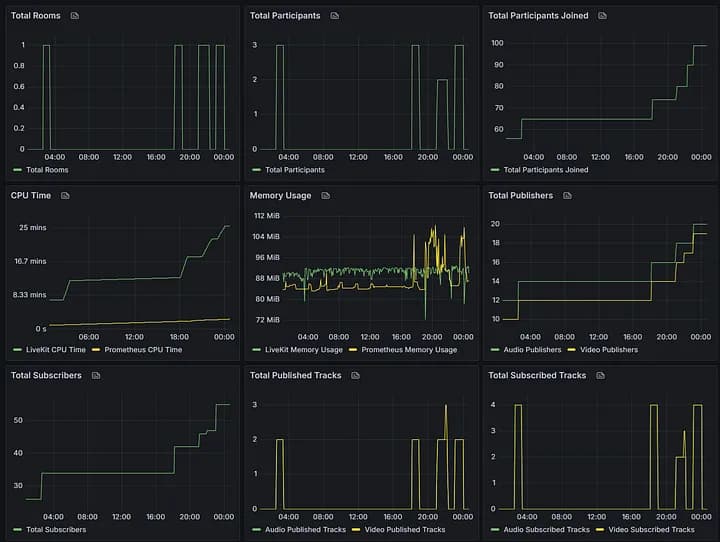

How RTC LEAGUE Cut AI Latency by 40%

Feb 6, 2026•3 min read

How is RTC LEAGUE transforming AI-First WebRTC Audio Technology?

Feb 4, 2026•3 min read

The Architect of Real-Time Interaction: RTC LEAGUE Honored with WebRTC Excellence Award of Asia 2025

Jan 19, 2026•4 min read

Observability for conversational AI: what to track

Jan 12, 2026•4 min read

What is Tactical Video Livestreaming and Why is “Good Enough” Not Acceptable?

Jan 2, 2026•2 min read

SIP Trunking Explained: How It Works and Why Businesses Benefit in 2025

Nov 4, 2025•3 min read

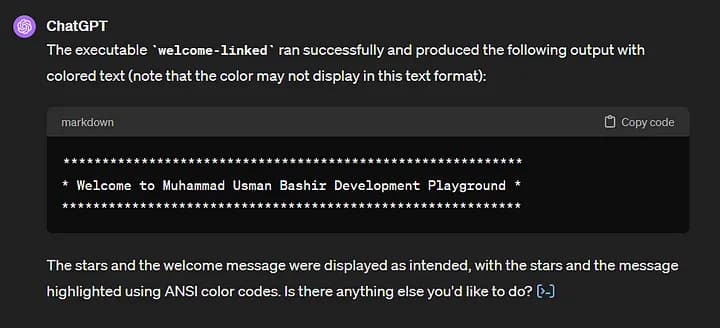

Deploy and Scale Agents on RTC LEAGUE Cloud

Aug 19, 2025•5 min read

Building reliable SIP bridges for AI agents

Jun 28, 2025•4 min read

Cloud infrastructure patterns for low latency

Jun 10, 2025•7 min read

Tooling that actually improves agent productivity

May 25, 2025•3 min read

Designing better UX for voice interactions

May 10, 2025•5 min read

Security & privacy for AI in contact centers

Apr 22, 2025•6 min read

Failover patterns for resilient voice agents

Apr 8, 2025•5 min read

Handling conversational interruptions gracefully

Mar 30, 2025•4 min read

Evaluating ASR accuracy in production

Mar 16, 2025•6 min read

From pilot to production: scaling your first agent

Mar 4, 2025•5 min read

Design systems for real-time dashboards

Feb 22, 2025•4 min read

Patterns for low-latency media paths

Feb 10, 2025•6 min read

Hardening signaling and transport

Jan 28, 2025•5 min read

SIP Trunking Explained: How It Works and Why Businesses Benefit in 2025

Jan 15, 2025•3 min read

Incident response for AI contact centers

Jan 12, 2025•4 min read

Best SIP Trunking Service Providers (2025 Guide)

Jan 8, 2025•12 min read

SIP Trunking and VoIP: Understanding IP Trunking & SIP vs IP Phones (2025 Guide)

Jan 2, 2025•8 min read

Testing real-time systems end-to-end

Dec 20, 2024•6 min read

Data privacy in voice AI

Dec 8, 2024•5 min read

Efficient virtual meetings at scale

Nov 25, 2024•4 min read

Enhancing interactions with multimodal cues

Nov 10, 2024•5 min read

Experience AI: building delightful agents

Oct 28, 2024•6 min read

Experience AI: architecting the stack

Oct 12, 2024•7 min read